Integrations

MrScraper can integrate smoothly into any workflow.

At this point, you need to decide how you want to work with the data.

Some people choose to download the results and process them manually. However, when you are dealing with large amounts of data or have real-time requirements, you need an integration. You can add the results to a database or a Google Sheet, or even send them by email.

Available integration options

The integrations you can build with your scrapers are practically unlimited. To provide flexibility and cover different scenarios, we currently offer three integration methods:

Webhooks

Webhooks are great because they send information in real time, as soon as a scraper has finished.

Most people use webhooks to integrate the results of scraping with their own applications using their favorite programming language.
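As a rough illustration, a minimal webhook receiver can be built with nothing but Python's standard library. The payload shape (`{"result": {"data": [...]}}`), the port, and the names `extract_rows`, `WebhookHandler` and `run` are assumptions made for this sketch, not MrScraper's documented format; inspect a real webhook delivery before relying on any field.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def extract_rows(payload):
    """Pull scraped rows out of a webhook payload.

    Assumes a hypothetical shape {"result": {"data": [{...}, ...]}};
    adjust the keys to match what MrScraper actually sends.
    """
    if not isinstance(payload, dict):
        return []
    result = payload.get("result")
    if not isinstance(result, dict):
        return []
    data = result.get("data")
    if not isinstance(data, list):
        return []
    return [row for row in data if isinstance(row, dict)]


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read exactly the number of bytes announced by the sender.
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        try:
            payload = json.loads(body)
        except ValueError:
            # Covers invalid JSON and bodies that are not valid UTF-8.
            self.send_response(400)
            self.end_headers()
            return
        rows = extract_rows(payload)
        # Replace this with your own logic: store rows, notify someone, etc.
        print(f"Received {len(rows)} row(s)")
        # Reply with a 2xx status; webhook senders commonly treat
        # anything else as a failed delivery.
        self.send_response(200)
        self.end_headers()


def run(host="0.0.0.0", port=8000):
    """Start the receiver (port 8000 is an arbitrary choice); blocks forever."""
    HTTPServer((host, port), WebhookHandler).serve_forever()

# To start listening, call: run()
```

Keeping the parsing in `extract_rows` separate from the HTTP handler makes it easy to test and to adapt once you know the real payload structure.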

There are also no-code apps such as Zapier or Make.com that accept incoming webhooks and enable you to build powerful integrations.

API

The API works the other way around: rather than MrScraper pushing results to you, your application or no-code app can request information from MrScraper at any time by making an API call.

This is mostly used when real-time delivery is not needed, or when you need information that the webhooks do not send.
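For comparison, polling the API from Python might look like the sketch below. The base URL, the `/scrapers/{id}/results` path and the Bearer-token header are placeholders chosen for illustration, and `build_results_request` and `fetch_results` are hypothetical helpers; MrScraper's actual endpoints and authentication scheme are described in its API documentation.

```python
import json
import urllib.request

# Placeholder values: replace them with the real base URL and token
# from the MrScraper API documentation and your account settings.
API_BASE = "https://api.example.com/v1"
API_TOKEN = "YOUR_API_TOKEN"


def build_results_request(base_url, token, scraper_id):
    """Build a GET request for a scraper's results (hypothetical endpoint)."""
    url = f"{base_url.rstrip('/')}/scrapers/{scraper_id}/results"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}", "Accept": "application/json"},
        method="GET",
    )


def fetch_results(base_url, token, scraper_id, timeout=30.0):
    """Perform the request and decode the JSON response body."""
    req = build_results_request(base_url, token, scraper_id)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

You would call it on your own schedule, for example `fetch_results(API_BASE, API_TOKEN, "SCRAPER_ID")` from a cron job, which is the key difference from webhooks: your code decides when to ask.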

No-code apps

No-code apps combine the previous two options in a form that is easy for non-technical users.

Apps such as Zapier or Make.com allow you to receive webhooks or perform API requests without having any programming knowledge.

MrScraper also has an official Zapier app, so you can connect your scrapers without writing a single line of code.

Let's integrate our scraper

We will use the scraper we just created to save MrScraper blog posts to a database.

Create a new Zap

Sign up for or log in to your Zapier account and create a new Zap.

Add the MrScraper app

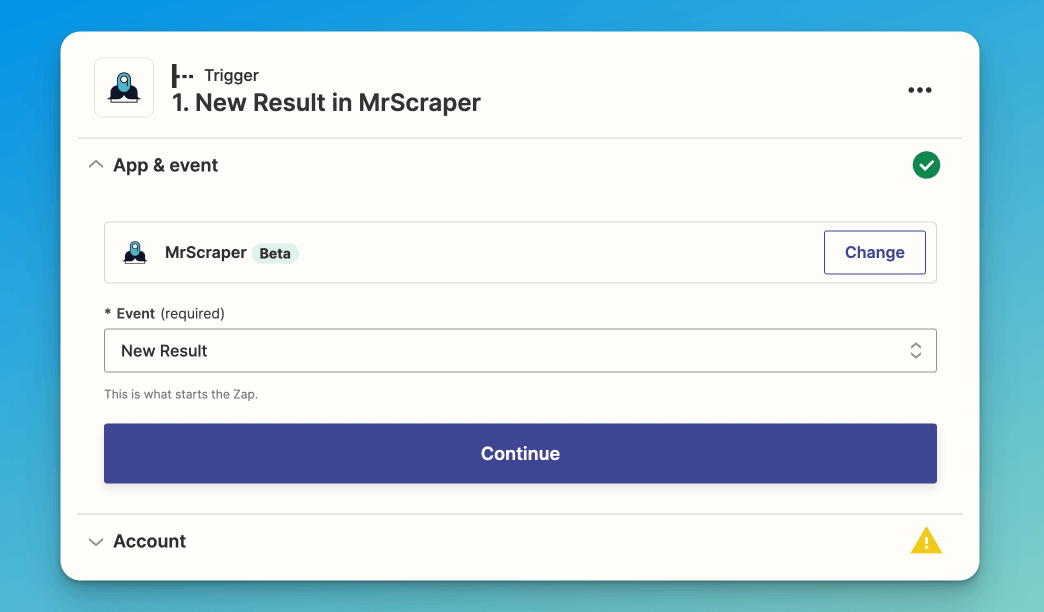

First, we need to create the step that triggers the new Zap. Select the MrScraper app and choose the "New Result" event.

Whenever MrScraper has a new scraping result ready, it will trigger this Zap.

Convert the results to line items

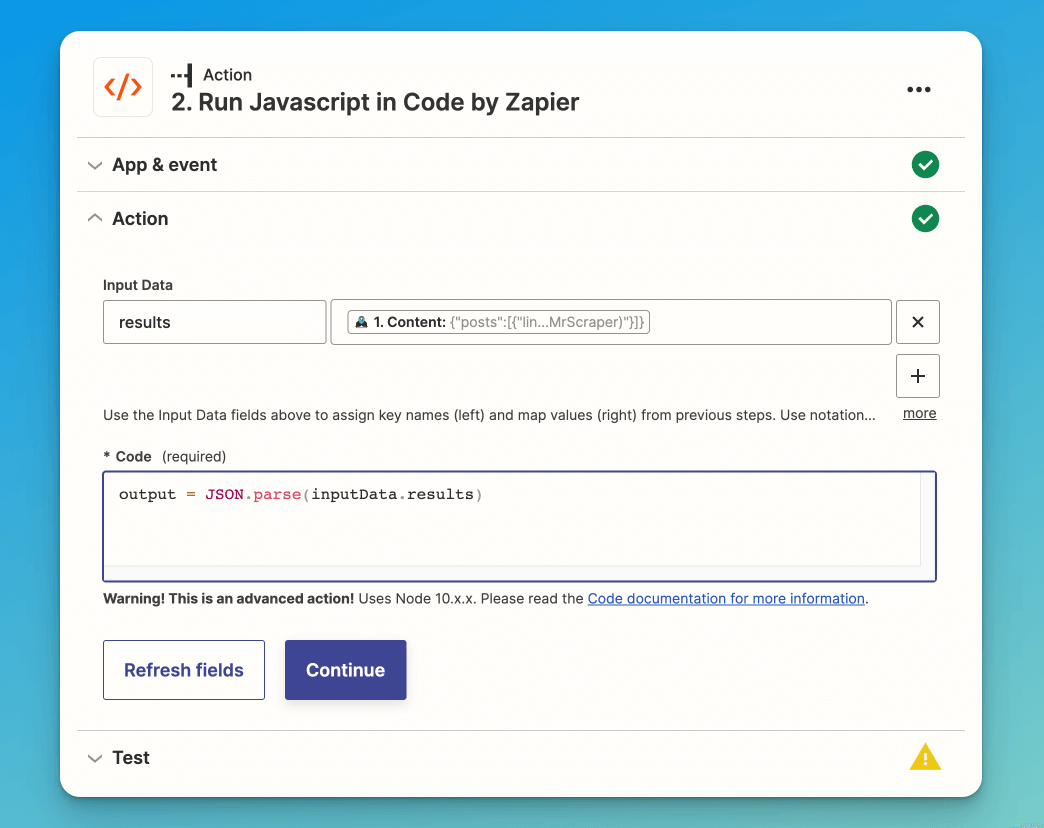

The next step is to transform the scraping results into a format that Zapier can understand, referred to as "line items."

We will use Code by Zapier to generate a JSON object from the scraping results.
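The Code step could contain something like the following Python. It assumes the trigger's results are mapped into an input field named `results` holding a JSON array of objects with `title` and `link` keys; the field name, that shape, and the `to_line_items` helper are all assumptions, so check the trigger's sample data first. Code by Zapier exposes mapped input fields as `input_data` and passes whatever you assign to `output` on to later steps. This sketch returns parallel `title` and `link` lists, one way to hand list data onward; confirm in the step test that Zapier presents it as line items in your setup.

```python
import json


def to_line_items(raw_results):
    """Turn a JSON string of scraped posts into parallel title/link lists.

    Assumes a hypothetical input like '[{"title": "...", "link": "..."}]'.
    Anything that is not a JSON array of objects yields empty lists.
    """
    try:
        posts = json.loads(raw_results)
    except (ValueError, TypeError):
        posts = []
    if not isinstance(posts, list):
        posts = []
    titles, links = [], []
    for post in posts:
        if isinstance(post, dict):
            titles.append(str(post.get("title") or ""))
            links.append(str(post.get("link") or ""))
    return {"title": titles, "link": links}

# Inside the Code by Zapier (Python) step, the last line would be:
# output = to_line_items(input_data["results"])
```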

Add to the database

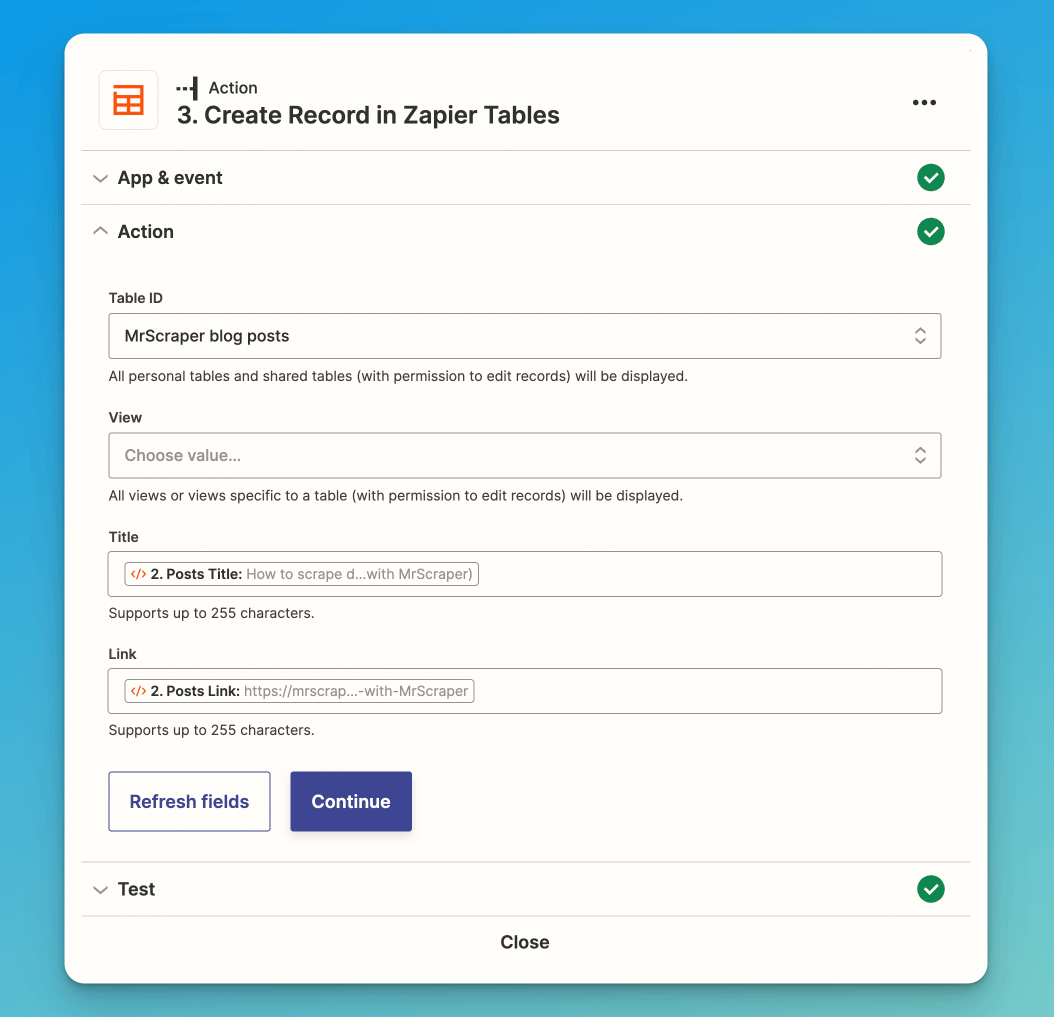

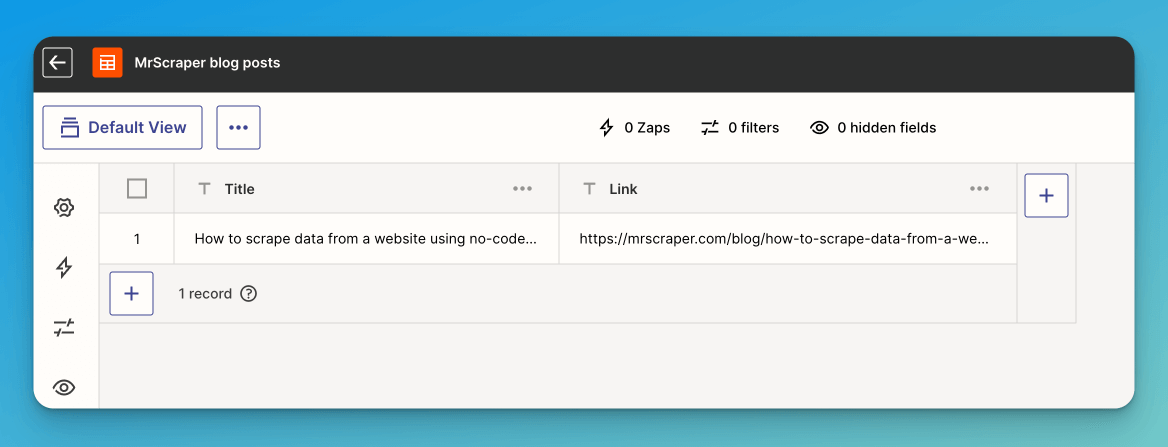

Lastly, we will save the posts to a database. In this example, we will use Zapier's new databases, which make it incredibly easy to store information.

Create a database, add the columns for title and link, and attach it to this final step.
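Zapier's database step handles storage for you. If you instead receive results through a webhook or the API in your own code, the equivalent step could be as small as this SQLite sketch. The table name `posts`, the `save_posts` helper, and deduplicating by link are illustrative assumptions, not part of MrScraper or Zapier.

```python
import sqlite3


def save_posts(conn, titles, links):
    """Store posts in a `posts` table with `title` and `link` columns.

    Returns the total number of rows in the table afterwards.
    """
    if len(titles) != len(links):
        raise ValueError("titles and links must have the same length")
    # `link` is the primary key, so re-running the same scrape does not
    # create duplicate rows (a design choice for this sketch).
    conn.execute(
        "CREATE TABLE IF NOT EXISTS posts (title TEXT NOT NULL, link TEXT PRIMARY KEY)"
    )
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT OR IGNORE INTO posts (title, link) VALUES (?, ?)",
            zip(titles, links),
        )
    return conn.execute("SELECT COUNT(*) FROM posts").fetchone()[0]
```

Paired with the line-item conversion, `save_posts(sqlite3.connect("posts.db"), items["title"], items["link"])` would mirror the two columns created in the Zapier database.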

And we are done!

Integrating your scraper is as simple as that. There are lots of possibilities; the only limit is your imagination!

What people think about MrScraper

The mission to make data accessible to everyone is truly inspiring. With MrScraper, data scraping and automation are now easier than ever, giving users of all skill levels the ability to access valuable data. The AI-powered no-code tool simplifies the process, allowing you to extract data without needing technical skills. Plus, the integration with APIs and Zapier makes automation smooth and efficient, from data extraction to delivery.

I'm excited to see how MrScraper will change data access, making it simpler for businesses, researchers, and developers to unlock the full potential of their data. This tool can transform how we use data, saving time and resources while providing deeper insights.

Adnan Sher

Product Hunt user

This tool sounds fantastic! The white glove service being offered to everyone is incredibly generous. It's great to see such customer-focused support.

Harper Perez

Product Hunt user

MrScraper is a tool that helps you collect information from websites quickly and easily. Instead of fighting annoying captchas, MrScraper does the work for you. It can grab lots of data at once, saving you time and effort.

Jayesh Gohel

Product Hunt user

Now that I've set up and tested my first scraper, I'm really impressed. It was much easier than expected, and results worked out of the box, even on sites that are tough to scrape!

Kim Moser

Computer consultant

MrScraper sounds like an incredibly useful tool for anyone looking to gather data at scale without the frustration of captcha blockers. The ability to get and scrape any data you need efficiently and effectively is a game-changer.

Nicola Lanzillot

Product Hunt user

Support

Head over to our community to engage directly with our team and other users.

Questions? Ask our team via live chat 24/5, or reach out to us on our official Twitter account or to our founder directly. We're always happy to help.