Top 4 Methods to Find All URLs on a Domain

Understanding the structure of a website is crucial for web developers, SEO experts, and digital marketers. Whether you're performing a site audit, analyzing competitors, or preparing for a site migration, a full list of a domain's URLs shows you what content exists and how it is organized. This guide walks you through four methods to find all URLs on a domain, with a special focus on using MrScraper.

Why Finding All URLs is Important

Before diving into the methods, it's essential to understand why finding all URLs on a domain is so important:

- SEO Audits: Uncover hidden and orphan pages, and confirm that all URLs are properly indexed.

- Content Inventory: Create a complete list of all content assets for repurposing, updating, or migration.

- Competitor Analysis: Analyze a competitor’s site structure to gain insights into their content strategy.

- Broken Link Check: Identify and fix broken links that could be hurting your SEO.

Method 1: Using MrScraper to Find All URLs on a Domain

One of the most effective ways to find all URLs on a domain is by using MrScraper. With its powerful scraping capabilities, MrScraper makes it easy to extract all URLs from any website, even the most complex ones. Here's how you can do it:

-

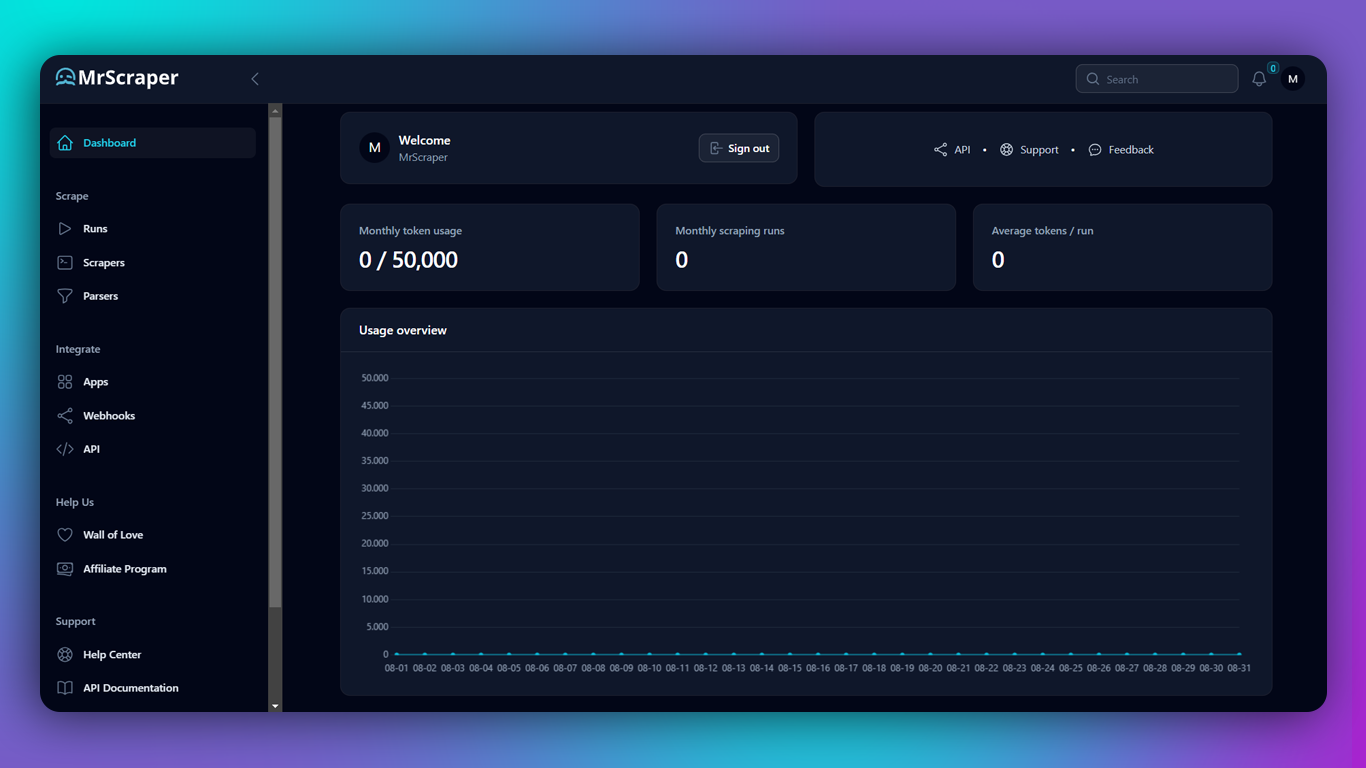

Sign Up for MrScraper: If you haven’t already, sign up for an account on MrScraper. The tool offers a user-friendly interface with a variety of features designed to meet your web scraping needs.

-

Create a New Project: Start by creating a new project in MrScraper. Enter the domain you want to scrape, and select the option to scrape all URLs.

-

Configure Scraping Settings: Customize your scraping settings based on your requirements. MrScraper allows you to define parameters such as depth level, URL filters, and more.

-

Run the Scraper: Once your settings are configured, run the scraper. MrScraper will crawl the entire domain and generate a comprehensive list of all URLs.

-

Export the Data: After the scraping is complete, you can export the list of URLs in various formats, such as CSV or JSON, for further analysis.
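If settings such as depth level and URL filters are new to you, the sketch below shows what they generally mean in a crawler. It is not MrScraper's API or implementation. The `crawl_plan` function, the `links` dictionary, and the `include` pattern are hypothetical names made up for illustration, and the "site" is a small in-memory link map rather than a real website.

```python
import re
from collections import deque

def crawl_plan(links, start, max_depth, include=None):
    """Walk a link map breadth-first and return the pages a crawl would keep.

    links     -- dict mapping a page to the pages it links to (hypothetical data)
    max_depth -- how many link hops from the start page the crawl may follow
    include   -- optional regex; only pages matching it are kept in the result
    """
    pattern = re.compile(include) if include else None
    seen = {start}
    queue = deque([(start, 0)])
    selected = []
    while queue:
        page, depth = queue.popleft()
        if pattern is None or pattern.search(page):
            selected.append(page)
        if depth == max_depth:
            continue  # depth limit reached: do not follow this page's links
        for nxt in links.get(page, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, depth + 1))
    return selected

# A tiny made-up site used only to demonstrate the two settings.
links = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1", "/blog/post-2"],
    "/blog/post-1": ["/blog/post-1/comments"],
}

print(crawl_plan(links, "/", max_depth=1))
print(crawl_plan(links, "/", max_depth=2, include=r"^/blog"))
```

A lower depth level keeps the crawl close to the start page, while a URL filter narrows the result to one section of the site.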

Why MrScraper? MrScraper stands out from other tools due to its AI-powered, no-code scraping features, which make it accessible even for non-technical users. Additionally, MrScraper’s seamless integration with workflows ensures that you can automate your scraping tasks and save valuable time.

Method 2: Using XML Sitemaps

Another common method to find all URLs on a domain is by accessing the site’s XML sitemap. XML sitemaps are usually located at https://www.example.com/sitemap.xml. Here’s how to use them:

- Access the XML Sitemap: Navigate to the domain's XML sitemap. Many websites publish one at the root of the domain, and the site's robots.txt file often points to it with a Sitemap: line.

- Extract URLs: The sitemap lists the URLs the site owner wants search engines to crawl, which may not cover every page on the site. You can copy these manually or parse the XML file with a language such as Python.

For those interested in parsing XML files programmatically, you can refer to our previous blog post titled Parsing XML with Python: A Comprehensive Guide. This guide walks you through the steps to extract data from XML files using Python, making it a great resource for handling sitemaps.
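As a quick illustration, here is a minimal sketch of pulling URLs out of a sitemap with Python's standard library. It assumes the sitemap follows the sitemaps.org format and that you have already downloaded its text. The `parse_sitemap` function and the sample XML are hypothetical and exist only for this example.

```python
import xml.etree.ElementTree as ET

# XML namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def parse_sitemap(xml_text):
    """Return (page_urls, child_sitemaps) found in one sitemap document."""
    root = ET.fromstring(xml_text)
    # A regular sitemap lists pages as <url><loc>...</loc></url>.
    pages = [el.text.strip() for el in root.findall("sm:url/sm:loc", SITEMAP_NS) if el.text]
    # A sitemap index lists further sitemaps as <sitemap><loc>...</loc></sitemap>.
    children = [el.text.strip() for el in root.findall("sm:sitemap/sm:loc", SITEMAP_NS) if el.text]
    return pages, children

# Made-up sitemap content standing in for a downloaded sitemap.xml.
SAMPLE = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/about</loc></url>
</urlset>"""

pages, children = parse_sitemap(SAMPLE)
for page in pages:
    print(page)
```

Large sites often split their sitemap into several files behind a sitemap index. In that case `children` holds the URLs of the other sitemaps, and you would fetch and parse each one in turn.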

Method 3: Google Search Console

If you have access to the domain’s Google Search Console, you can easily find a list of all URLs:

- Log in to Google Search Console: Select the property corresponding to your domain.

- Navigate to Coverage Report: Under the “Index” section, click on “Coverage.” (In newer versions of Search Console, this report appears as “Pages” under “Indexing.”) Here, you’ll find the URLs that Google has indexed.

- Export URLs: Google Search Console allows you to export this data, giving you a list of indexed URLs. Exports can be capped in size, so very large sites may not get every URL this way.

Method 4: Manual Crawling with Python

For those who prefer a more hands-on approach, you can use Python to manually crawl a website and extract all URLs. This method is more technical but offers complete control over the crawling process.

Here’s a basic Python script to get you started:

    import requests
    from bs4 import BeautifulSoup
    from urllib.parse import urljoin, urldefrag, urlparse

    def find_urls(domain):
        host = urlparse(domain).netloc
        urls = {domain}  # include the start page itself
        to_crawl = [domain]
        while to_crawl:
            url = to_crawl.pop(0)
            try:
                # A timeout keeps one slow page from stalling the whole crawl
                response = requests.get(url, timeout=10)
                soup = BeautifulSoup(response.text, 'html.parser')
                for link in soup.find_all('a', href=True):
                    # Resolve relative links against the current page and drop #fragments
                    full_url = urldefrag(urljoin(url, link['href'])).url
                    # Stay on the same host and skip URLs already seen
                    if urlparse(full_url).netloc == host and full_url not in urls:
                        urls.add(full_url)
                        to_crawl.append(full_url)
            except requests.exceptions.RequestException as e:
                print(f"Failed to crawl {url}: {e}")
        return urls

    domain = 'https://www.example.com'
    urls = find_urls(domain)
    for url in urls:
        print(url)

Explanation:

- requests: Used to fetch the content of each URL.

- BeautifulSoup: A powerful library for parsing HTML and XML documents.

- urljoin: Ensures that relative URLs are converted to absolute URLs.

This script will start from the homepage of the specified domain, crawl through all accessible links, and print out a list of all URLs it finds. You can save these URLs into a file or further process them according to your needs.
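A crawl often returns the same page in several forms, or picks up links that point to other sites. Here is a minimal sketch of cleaning a crawl result and saving it to a file. It assumes the result is a collection of absolute URLs. The helper names `same_site`, `clean_urls`, and `save_urls`, and the sample URLs, are hypothetical.

```python
from urllib.parse import urldefrag, urlparse

def same_site(url, start_url):
    """True when both URLs share exactly the same host."""
    return urlparse(url).netloc == urlparse(start_url).netloc

def clean_urls(urls, start_url):
    """Drop #fragments, off-site links, and duplicates; return a sorted list."""
    cleaned = {urldefrag(u).url for u in urls if same_site(u, start_url)}
    return sorted(cleaned)

def save_urls(urls, path):
    """Write one URL per line to a text file."""
    with open(path, "w", encoding="utf-8") as fh:
        fh.write("\n".join(urls) + "\n")

# Made-up crawl output used only for demonstration.
found = [
    "https://www.example.com/a#top",
    "https://www.example.com/a",
    "https://www.example.com.evil.net/x",
    "https://www.example.com/b",
]

result = clean_urls(found, "https://www.example.com")
print(result)
# To keep the list, call for example: save_urls(result, "urls.txt")
```

Comparing hosts with `urlparse` instead of searching for the domain as a substring keeps look-alike hosts such as `www.example.com.evil.net` out of the list.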

Conclusion

Finding all URLs on a domain is a vital task for anyone involved in web development, SEO, or digital marketing. Whether you’re using a tool like MrScraper, analyzing XML sitemaps, or leveraging Google Search Console, each method offers unique benefits. If you prefer a hands-on approach, coding your own crawler with Python gives you full control over the process.
