How to Use Mrscraper ScrapeGPT with Pagination: A Comparison with Manual Scraping

There are two main approaches to web scraping: manual coding with tools like Puppeteer and automated scraping using no-code platforms like Mrscraper ScrapeGPT. In this article, we’ll compare these two approaches using an example: scraping keyboard listings on eBay across multiple pages.


Manual Scraping with Puppeteer: A Coding-Intensive Approach

Puppeteer is a powerful Node.js library that allows you to control a headless browser for web scraping. Here’s how you can scrape eBay keyboard listings with Puppeteer.

Step 1: Setting Up Puppeteer

First, install Puppeteer by running:

    npm install puppeteer

Then, create a script file, for example, scrapeKeyboards.js, and set up Puppeteer:

    const puppeteer = require('puppeteer');

    (async () => {
      // Launch a headless browser and open a new tab.
      const browser = await puppeteer.launch();
      const page = await browser.newPage();

      // Load the eBay search results for "keyboard".
      await page.goto('https://www.ebay.com/sch/i.html?_from=R40&_trksid=p4432023.m570.l1313&_nkw=keyboard&_sacat=0');

      // Runs inside the page: collect the title and price of each listing.
      const items = await page.evaluate(() => {
        const results = [];
        const listings = document.querySelectorAll('.s-item');
        listings.forEach(listing => {
          const title = listing.querySelector('.s-item__title')?.innerText;
          const price = listing.querySelector('.s-item__price')?.innerText;
          // Skip entries that are missing a title or a price.
          if (title && price) {
            results.push({ title, price });
          }
        });
        return results;
      });

      console.log(items);
      await browser.close();
    })();

Step 2: Scraping the First Page

Run the script with `node scrapeKeyboards.js`. It scrapes the first page of eBay search results and prints an array of objects, each holding the title and price of one keyboard listing.
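The prices collected this way are plain strings, which makes them awkward to sort or filter. The following is a minimal sketch of one way to turn them into numbers, assuming US-style price text such as "$24.99" or a range such as "$12.99 to $24.99". The helper names `parsePrice` and `normalizeItems` are hypothetical and not part of Puppeteer.

```javascript
// Hypothetical helper: convert a price string such as "$24.99" into a number.
// Assumes US-style formatting; for a range it returns the first (lower) price.
function parsePrice(text) {
  // Grab the first run of digits, allowing thousands separators and decimals.
  const match = String(text).match(/\d[\d,]*(?:\.\d+)?/);
  if (!match) return null; // no digits found, e.g. an empty or textual price
  return Number(match[0].replace(/,/g, ''));
}

// Hypothetical helper: tidy the { title, price } objects returned by the scraper.
function normalizeItems(items) {
  return items.map(({ title, price }) => ({
    title: title.trim(),
    price: parsePrice(price),
  }));
}
```

Calling `normalizeItems(items)` on the scraped array keeps the titles and replaces each price string with a number, or `null` when no number could be found.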

Step 3: Handling Pagination

To scrape multiple pages, modify the script to loop through the first three pages:

    const puppeteer = require('puppeteer');

    (async () => {
      const browser = await puppeteer.launch();
      const page = await browser.newPage();
      let allItems = [];

      // Visit pages 1 to 3; the _pgn query parameter selects the page number.
      for (let i = 1; i <= 3; i++) {
        await page.goto(`https://www.ebay.com/sch/i.html?_nkw=keyboard&_sop=12&_ipg=50&_pgn=${i}`);

        // Runs inside the page: collect the title and price of each listing.
        const items = await page.evaluate(() => {
          const results = [];
          const listings = document.querySelectorAll('.s-item');
          listings.forEach(listing => {
            const title = listing.querySelector('.s-item__title')?.innerText;
            const price = listing.querySelector('.s-item__price')?.innerText;
            // Skip entries that are missing a title or a price.
            if (title && price) {
              results.push({ title, price });
            }
          });
          return results;
        });

        // Append this page's listings to the combined list.
        allItems = allItems.concat(items);
      }

      console.log(allItems);
      await browser.close();
    })();
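The pagination logic can also be separated from the browser code, which makes it easier to test and reuse. The sketch below is one possible arrangement, not the only one: `buildSearchUrl` and `collectPages` are hypothetical helper names, the one-second pause between pages is an assumed default, and the `_sop` sort parameter from the script is left out for brevity. The query parameters `_nkw`, `_ipg`, and `_pgn` are the ones used in the script.

```javascript
// Build the search URL for a given page number.
// _nkw is the keyword, _ipg the items per page, _pgn the page number.
function buildSearchUrl(keyword, pageNumber, perPage = 50) {
  const url = new URL('https://www.ebay.com/sch/i.html');
  url.searchParams.set('_nkw', keyword);
  url.searchParams.set('_ipg', String(perPage));
  url.searchParams.set('_pgn', String(pageNumber));
  return url.toString();
}

// Hypothetical helper: visit pages 1..lastPage in order and merge the results.
// `scrapePage` is any async function that takes a URL and returns an array.
async function collectPages(scrapePage, keyword, lastPage, delayMs = 1000) {
  const allItems = [];
  for (let pageNumber = 1; pageNumber <= lastPage; pageNumber++) {
    const items = await scrapePage(buildSearchUrl(keyword, pageNumber));
    allItems.push(...items);
    // Pause between pages so requests are not sent back to back.
    if (pageNumber < lastPage) {
      await new Promise(resolve => setTimeout(resolve, delayMs));
    }
  }
  return allItems;
}
```

With Puppeteer, `scrapePage` could be a function that calls `page.goto(url)` and then runs the same `page.evaluate` extraction used in the script. Because the helper only depends on that callback, it can be tried out with a stub function before a browser is involved.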

Step 4: Viewing the Results

Once the script completes, you’ll see the scraped data from all three pages in the console. You can modify the script to output the data to a file or database.
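As a rough illustration of writing the data to a file, the sketch below saves the scraped array as both JSON and CSV using Node's built-in `fs` module. The helper names `toCsvField`, `toCsv`, and `saveItems` and the output path are assumptions made for this example; the items are expected to have the `{ title, price }` shape produced by the script.

```javascript
const fs = require('fs');

// Escape one CSV field: wrap it in quotes when it contains a comma, quote, or newline.
function toCsvField(value) {
  const text = String(value ?? '');
  return /[",\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text;
}

// Turn [{ title, price }, ...] into CSV text with a header row.
function toCsv(items) {
  const rows = items.map(item => [item.title, item.price].map(toCsvField).join(','));
  return ['title,price', ...rows].join('\n') + '\n';
}

// Hypothetical helper: write the items to <basePath>.json and <basePath>.csv.
function saveItems(items, basePath) {
  fs.writeFileSync(`${basePath}.json`, JSON.stringify(items, null, 2));
  fs.writeFileSync(`${basePath}.csv`, toCsv(items));
}
```

In the script, replacing `console.log(allItems)` with something like `saveItems(allItems, 'keyboards')` would produce `keyboards.json` and `keyboards.csv` in the current directory.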

Summary

While Puppeteer gives you complete control over the scraping process, it requires writing and maintaining custom code. You handle pagination, data extraction, and browser automation yourself, which suits developers who need precise control and have time to invest in building and maintaining scripts.

Mrscraper ScrapeGPT: The Easy Way

For those looking for a more accessible solution, Mrscraper ScrapeGPT offers an effortless way to scrape data across multiple pages with no coding required.

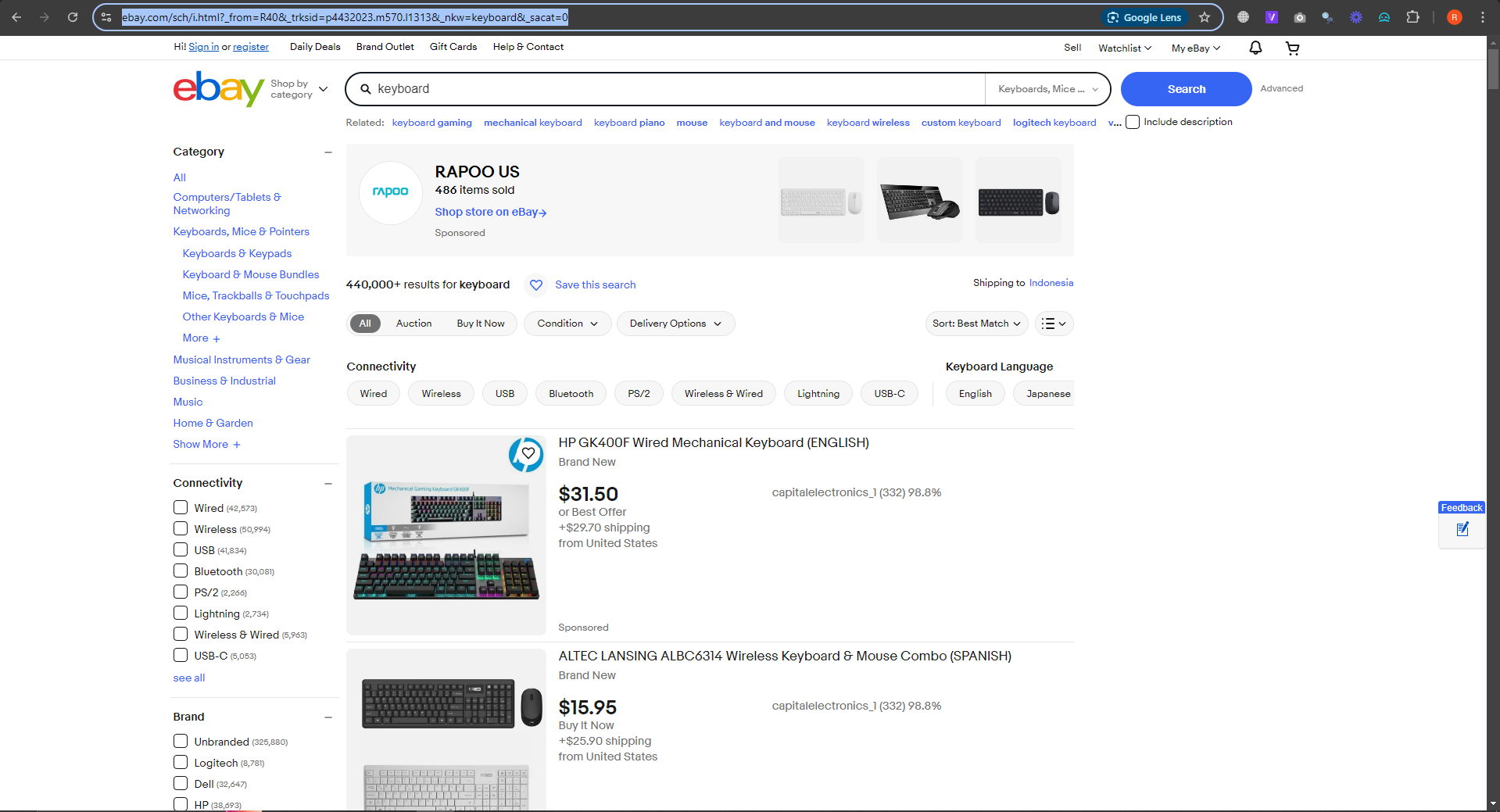

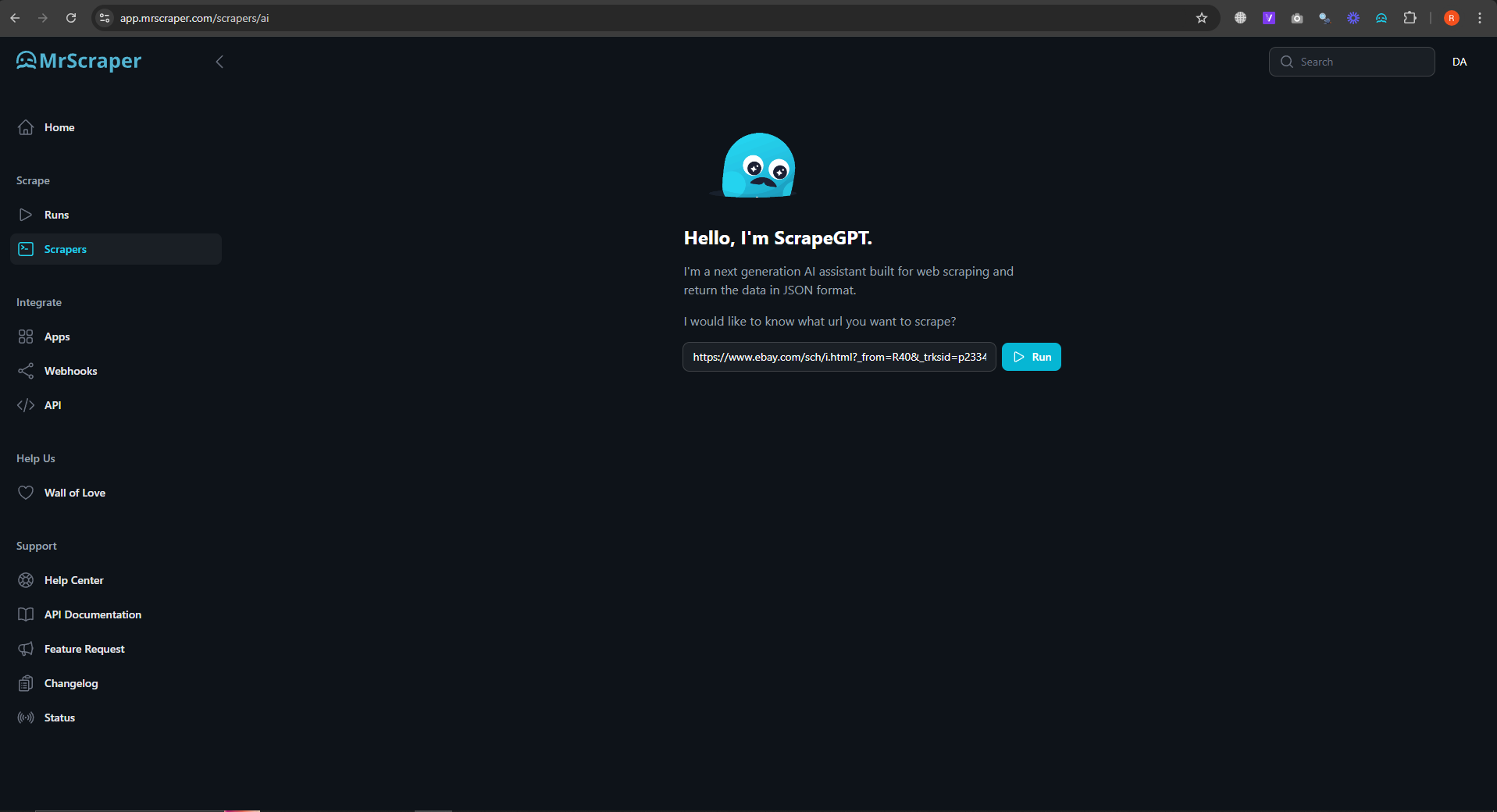

Step 1: Input the URL

Start by providing the eBay URL for the product you want to scrape. In this case, you’ll use the eBay search results page for keyboards. Simply paste the URL into Mrscraper’s input field.


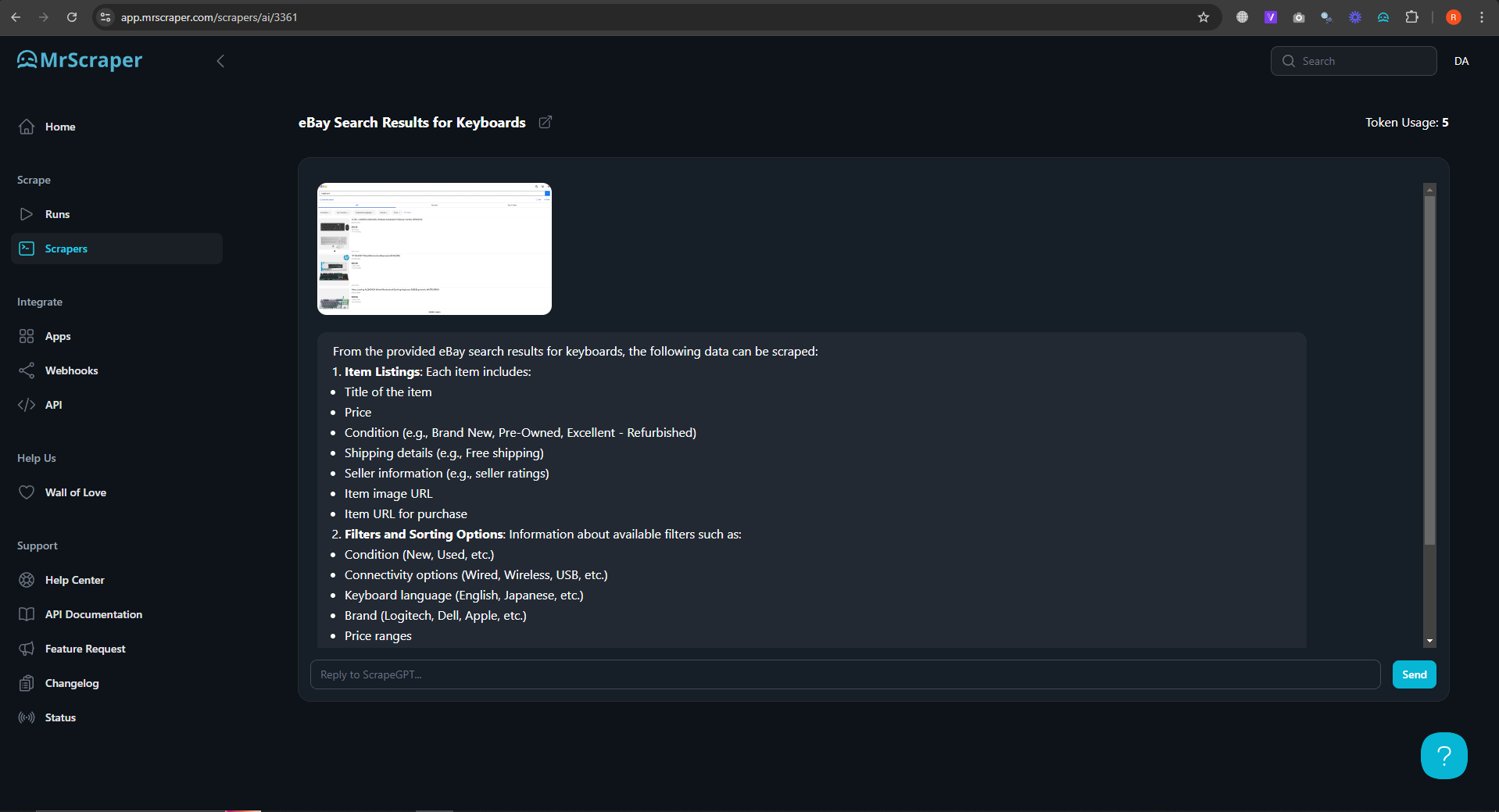

Step 2: Scraping the First Page

Once the URL is submitted, Mrscraper will automatically scrape data from the first page. The AI will intelligently extract the product names, prices, and other relevant information from the listings.


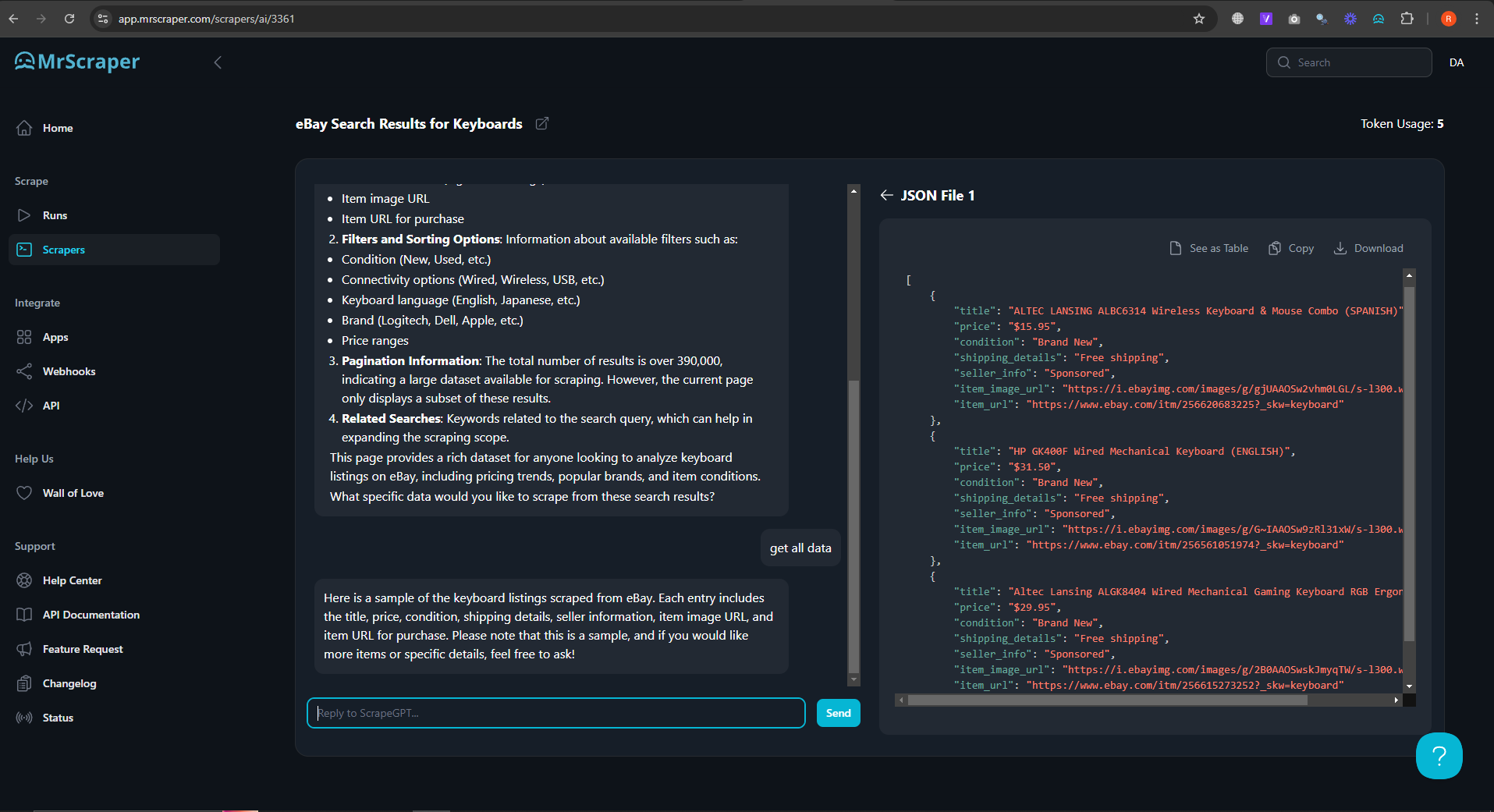

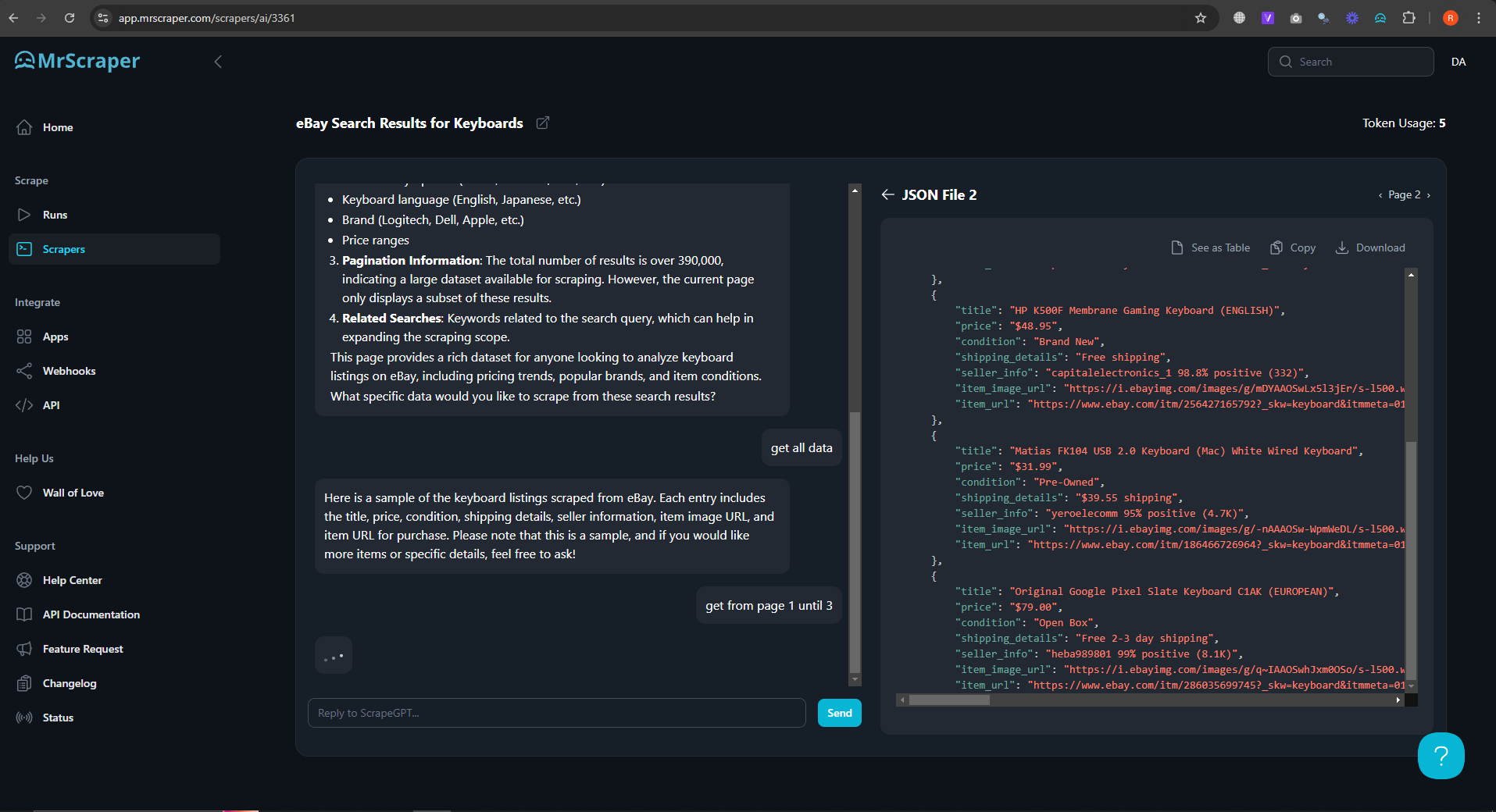

Step 3: Pagination with AI Prompt

To scrape data from additional pages, you just need to use a simple AI prompt. In this case, enter:


"Get from page 1 until 3"

This prompt tells ScrapeGPT to extract data from the first three pages of search results.

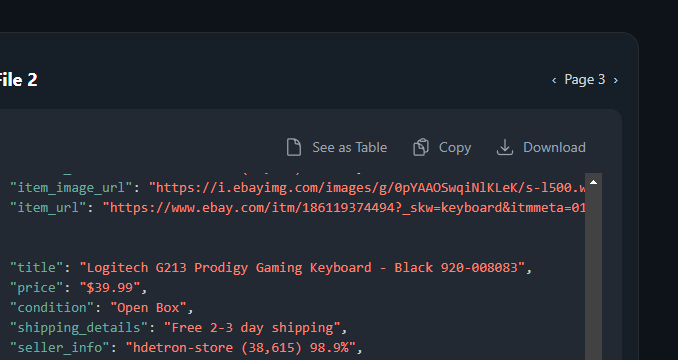

Step 4: View the Data with Pagination

Mrscraper will display the data from all three pages in a paginated format within its interface. You can easily navigate through the data, and all the results will be available for download.

Summary

With Mrscraper ScrapeGPT, scraping multiple pages is quick and doesn’t require any technical knowledge. You can scrape a large dataset using just a URL and a simple AI prompt, making it an ideal tool for non-developers or those who want a faster, hassle-free solution.

Comparing Puppeteer vs. Mrscraper ScrapeGPT

| Feature | Puppeteer | Mrscraper ScrapeGPT |
|---|---|---|
| Ease of Use | Requires scripting knowledge | No coding required |
| Pagination | Manual coding required | Handled with a simple AI prompt |
| Setup Time | Time-consuming | Almost instant |
| Customizability | Fully customizable | Limited to predefined options |
| Ideal For | Advanced users needing control | Quick and easy scraping |

Conclusion

Both Puppeteer and Mrscraper ScrapeGPT have their advantages depending on your needs. Puppeteer offers full control and flexibility but requires considerable effort and coding skills. Mrscraper, on the other hand, provides an intuitive, fast, and no-code solution that is perfect for scraping without technical overhead. For most users looking for quick and efficient scraping, Mrscraper ScrapeGPT is the ideal choice.
